LLM From Scratch #1 — What is an LLM? Your Beginner’s Guide

Well hey everyone, welcome to this LLM from scratch series! :D

I’m excited to announce that I’m starting this series! I’ve decided to focus on “LLMs from scratch,” where we’ll explore how to build your own LLM. 😗 I will do my best to teach you all the math and everything else involved, starting from the very basics.

Now, some of you might be wondering about the prerequisites for this course. The prerequisites are:

1. Basic Python

2. Some Math Knowledge

3. Understanding of Neural Networks.

4. Familiarity with RNNs or NLP (Natural Language Processing) is helpful, but not required.

If you already have some background in these areas, you’ll be in a great position to follow along. But even if you don’t, please stick with the series! I will try my best to explain each topic clearly. And Yes, this series might take some time to complete, but I truly believe it will be worth it in the end.

So, let’s get started!

Let’s start with the most basic question: What is a Large Language Model?

Well, you can say a Large Language Model is something that can understand, generate, and respond to human-like text.

For example, if I go to chat.openai.com (ChatGPT) and ask, “Who is the prime minister of India?”

It will give me the answer that it is Narendra Modi. This means it understands what I asked and generated a response to it.

To be more specific, a Large Language Model is a type of neural network that helps it understand, generate, and respond to human-like text (check the image above). And it’s trained on a very, very, very large amount of data.

Now, if you’re curious about what a neural network is…

A neural network is a method in machine learning that teaches computers to process data or learn from data in a way inspired by the human brain. (See the “This is how a neural network looks” section in the image above)

And wait! If you’re getting confused by different terms like “machine learning,” “deep learning,” and all that…

Don’t worry, we will cover those too! Just hang tight with me. Remember, this is the first part of this series, so we are keeping things basic for now.

Now, let’s move on to the second thing: LLMs vs. Earlier NLP Models. As you know, LLMs have kind of revolutionized NLP tasks.

Earlier language models weren’t able to do things like write an email based on custom instructions. That’s a task that’s quite easy for modern LLMs.

To explain further, before LLMs, we had to create different NLP models for each specific task. For example, we needed separate models for:

- Sentiment Analysis (understanding if text is positive, negative, or neutral)

- Language translation (like English to Hindi)

- Email filters (to identify spam vs. non-spam)

- Named entity recognition (identifying people, organizations, locations in text)

- Summarization (creating shorter versions of longer texts)

- …and many other tasks!

But now, a single LLM can easily perform all of these tasks, and many more!

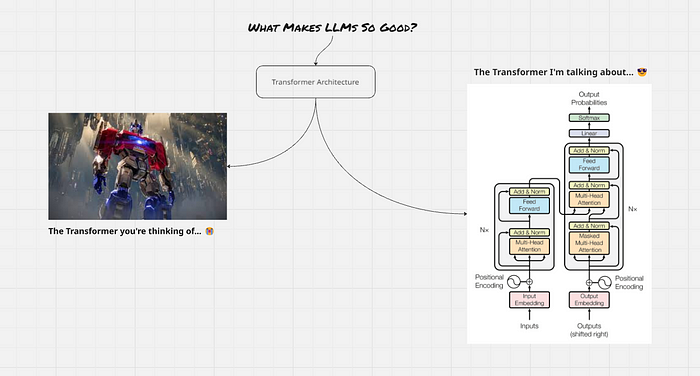

Now, you’re probably thinking: What makes LLMs so much better?

Well, the “secret sauce” that makes LLMs work so well lies in the Transformer architecture. This architecture was introduced in a famous research paper called “Attention is All You Need.” Now, that paper can be quite challenging to read and understand at first. But don’t worry, in a future part of this series, we will explore this paper and the Transformer architecture in detail.

I’m sure some of you are looking at terms like “input embedding,” “positional encoding,” “multi-head attention,” and feeling a bit confused right now. But please don’t worry! I promise I will explain all of these concepts to you as we go.

Remember earlier, I promised to tell you about the difference between Artificial Intelligence, Machine Learning, Deep Learning, Generative AI, and LLMs?

Well, I think we’ve reached a good point in our post to understand these terms. Let’s dive in!

As you can see in the image, the broadest term is Artificial Intelligence. Then, Machine Learning is a subset of Artificial Intelligence. Deep Learning is a subset of Machine Learning. And finally, Large Language Models are a subset of Deep Learning. Think of it like nesting dolls, with each smaller doll fitting inside a larger one.

The above image gives you a general overview of how these terms relate to each other. Now, let’s look at the literal meaning of each one in more detail:

- Artificial intelligence (AI): Artificial Intelligence is a field of computer science that focuses on creating machines capable of performing tasks that typically require human intelligence. This includes abilities like learning, problem-solving, decision-making, and understanding natural language. AI achieves this by using algorithms and data to mimic human cognitive functions. This allows computers to analyze information, recognize patterns, and make predictions or take actions without needing explicit human programming for every single situation.

In simpler words, you can think of Artificial Intelligence as making computers “smart.” It’s like teaching a computer to think and learn in a way that’s similar to how humans do. Instead of just following pre-set instructions, AI enables computers to figure things out on their own, solve problems, and make decisions based on the information they have. This helps them perform tasks like understanding spoken language, recognizing images, or even playing complex games effectively. - Machine Learning (ML): It is a branch of Artificial Intelligence that focuses on teaching computers to learn from data without being explicitly programmed. Instead of giving computers step-by-step instructions, you provide Machine Learning algorithms with data. These algorithms then learn patterns from the data and use those patterns to make predictions or decisions. A good example is a spam filter that learns to recognize junk emails by analyzing patterns in your inbox.

- Deep Learning (DL): It is a more advanced type of Machine Learning that uses complex, multi-layered neural networks. These neural networks are inspired by the structure of the human brain. This complex structure allows Deep Learning models to automatically learn very intricate features directly from vast amounts of data. This makes Deep Learning particularly powerful for complex tasks like facial recognition or understanding speech, tasks that traditional Machine Learning methods might struggle with because they often require manually defined features. Essentially, Deep Learning is a specialized and more powerful tool within the broader field of Machine Learning, and it excels at handling complex tasks with large datasets.

- Large Language Models: As we defined earlier, a Large Language Model is a type of neural network designed to understand, generate, and respond to human-like text.

- Generative AI is a type of Artificial Intelligence that uses deep neural networks to create new content. This content can be in various forms, such as images, text, videos, and more. The key idea is that Generative AI generates new things, rather than just analyzing or classifying existing data. What’s really interesting is that you can often use natural language — the way you normally speak or write — to tell Generative AI what to create. For example, if you type “create a picture of a dog” in tools like DALL-E or Midjourney, Generative AI will understand your natural language request and generate a completely new image of a dog for you.

Now, for the last section of today’s blog: Applications of Large Language Models (I know you probably already know some, but I still wanted to mention them!)

Here are just a few examples:

- Chatbot and Virtual Assistants.

- Machine Translation

- Sentiment Analysis

- Content Creation

- … and many more!

Well, I think that’s it for today! This first part was just an introduction. I’m planning for our next blog post to be about pre-training and fine-tuning. We’ll start with a high-level overview to visualize the process, and then we’ll discuss the stages of building an LLM. After that, we will really start building and coding! We’ll begin with tokenizers, then move on to BPE (Byte Pair Encoding), data loaders, and much more.

Regarding posting frequency, I’m not entirely sure yet. Writing just this blog post today took me around 3–4 hours (including all the distractions, lol!). But I’ll see what I can do. My goal is to deliver at least one blog post each day.

So yeah, if you are reading this, thank you so much! And if you have any doubts or questions, please feel free to leave a comment or ask me on Telegram: @omunaman. No problem at all — just keep learning, keep enjoying, and thank you!